Six Tips For DeepSeek Success

Wiz Research informed DeepSeek of the breach, and the AI company locked down the database; consequently, DeepSeek AI products should not be affected. Some experts dispute the figures the company has provided, however. The high-load experts are detected based on statistics collected during online deployment and are adjusted periodically (e.g., every 10 minutes). Improved models are a given. Before we examine and compare DeepSeek's performance, here's a quick overview of how models are measured on code-specific tasks. One thing to keep in mind when building quality training material to teach people Chapel is that, at the moment, the best code generator for various programming languages is DeepSeek Coder 2.1, which is freely available for people to use. Let's just focus on getting a great model to do code generation, summarization, and all these smaller tasks. It's January 20th, 2025, and our great nation stands tall, ready to face the challenges that define us. "As organizations rush to adopt AI tools and services from a growing number of startups and providers, it's important to remember that by doing so, we're entrusting these companies with sensitive data," Nagli said. Its V3 model raised some awareness of the company, although its content restrictions around sensitive topics concerning the Chinese government and its leadership sparked doubts about its viability as an industry competitor, the Wall Street Journal reported.
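
The article says high-load experts are detected from statistics collected during online deployment and adjusted periodically, but does not say how. The following is a minimal sketch, assuming the router's expert choice for each token can be observed and that the `top_k` experts receiving the most tokens in each monitoring window are flagged as high-load. The class `ExpertLoadMonitor`, its parameters, and the ranking rule are hypothetical illustrations, not DeepSeek's implementation.

```python
from collections import Counter
from typing import Iterable, List


class ExpertLoadMonitor:
    """Hypothetical monitor: counts routed tokens per expert within a time window."""

    def __init__(self, num_experts: int, top_k: int, window_seconds: float = 600.0):
        self.num_experts = num_experts
        self.top_k = top_k
        # 600 s mirrors the "e.g., every 10 minutes" example in the text (assumed).
        self.window_seconds = window_seconds
        self.counts: Counter = Counter()
        self.window_start = 0.0
        self.high_load: List[int] = []

    def record(self, expert_ids: Iterable[int]) -> None:
        """Record the expert the router chose for each token in a batch."""
        for e in expert_ids:
            if not 0 <= e < self.num_experts:
                raise ValueError(f"unknown expert id {e}")
            self.counts[e] += 1

    def maybe_rebalance(self, now: float) -> List[int]:
        """When a window has elapsed, flag the top_k busiest experts and reset counts.

        Between window boundaries the previously flagged set is returned unchanged.
        """
        if now - self.window_start >= self.window_seconds:
            # Sort by descending token count; ties broken by expert id for determinism.
            ranked = sorted(range(self.num_experts), key=lambda e: (-self.counts[e], e))
            self.high_load = ranked[: self.top_k]
            self.counts.clear()
            self.window_start = now
        return self.high_load
```

For example, on a fresh monitor with `num_experts=8, top_k=2`, calling `record([3, 3, 3, 5, 5, 1])` and then `maybe_rebalance(now=600.0)` returns `[3, 5]`. In a real serving system the flagged experts might then be replicated on additional devices; that step is outside this sketch.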

Wiz Research informed deepseek - simply click the next internet site - of the breach and the AI company locked down the database; subsequently, DeepSeek AI products should not be affected. Some consultants dispute the figures the corporate has supplied, however. The high-load experts are detected based mostly on statistics collected during the online deployment and are adjusted periodically (e.g., each 10 minutes). Improved fashions are a given. Before we perceive and compare deepseeks efficiency, here’s a fast overview on how fashions are measured on code specific tasks. One thing to take into consideration because the method to constructing quality coaching to teach individuals Chapel is that in the meanwhile the best code generator for various programming languages is Deepseek Coder 2.1 which is freely accessible to make use of by people. Let’s just focus on getting a fantastic model to do code generation, to do summarization, to do all these smaller tasks. It’s January 20th, 2025, and our great nation stands tall, able to face the challenges that outline us. "As organizations rush to undertake AI instruments and companies from a growing number of startups and suppliers, it’s important to remember that by doing so, we’re entrusting these companies with sensitive data," Nagli stated. Its V3 mannequin raised some consciousness about the corporate, though its content restrictions round delicate subjects about the Chinese authorities and its leadership sparked doubts about its viability as an industry competitor, the Wall Street Journal reported.

It's called DeepSeek R1, and it's rattling nerves on Wall Street. There is a downside to R1, DeepSeek V3, and DeepSeek's other models, however. But R1, which came out of nowhere when it was revealed late last year, launched last week and gained significant attention this week when the company revealed to the Journal its shockingly low cost of operation. The company said it had spent just $5.6 million powering its base AI model, compared with the hundreds of millions, if not billions, of dollars US companies spend on their AI technologies. The company prices its products and services well below market value, and gives others away for free. Released in January, R1 performs as well as OpenAI's o1 model on key benchmarks, DeepSeek claims. If DeepSeek V3, or a similar model, were released with full training data and code, as a true open-source language model, then the cost numbers could be taken at face value. DeepSeek-R1 achieves performance comparable to OpenAI-o1 across math, code, and reasoning tasks. Being a reasoning model, R1 effectively fact-checks itself, which helps it avoid some of the pitfalls that usually trip up models.

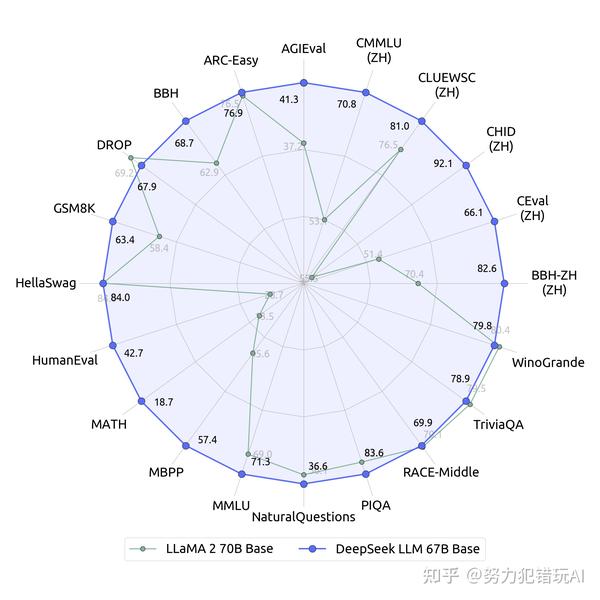

Do they do step-by-step reasoning? The training regimen employed large batch sizes and a multi-step learning rate schedule, ensuring robust and efficient learning. We delve into the study of scaling laws and present our distinctive findings that facilitate the scaling of large-scale models in two commonly used open-source configurations, 7B and 67B. Guided by the scaling laws, we introduce DeepSeek LLM, a project dedicated to advancing open-source language models with a long-term perspective. AI is a power-hungry and cost-intensive technology, so much so that America's most powerful tech leaders are buying up nuclear power companies to provide the necessary electricity for their AI models. DeepSeek shook up the tech industry over the last week as the Chinese company's AI models rivaled American generative AI leaders. Sam Altman, CEO of OpenAI, said last year that the AI industry would need trillions of dollars in investment to support the development of the in-demand chips needed to power the electricity-hungry data centers that run the sector's complex models.
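
The paragraph mentions a multi-step learning rate schedule without giving its parameters. As a hedged illustration, here is one common form of such a schedule: a linear warmup followed by step drops at fixed fractions of total training. The function name `multi_step_lr`, the warmup length, the milestone fractions (80% and 90%), and the decay factors (0.316 and 0.1) are assumed placeholder values, not figures from this article.

```python
def multi_step_lr(step: int, total_steps: int, max_lr: float,
                  warmup_steps: int = 2000,
                  milestones: tuple = (0.8, 0.9),
                  factors: tuple = (0.316, 0.1)) -> float:
    """Learning rate at `step` under warmup plus multi-step decay.

    All default values are illustrative assumptions. Each factor is an absolute
    multiplier of max_lr that applies once its milestone fraction is reached;
    factors are not compounded.
    """
    if step < 0 or total_steps <= 0:
        raise ValueError("step must be >= 0 and total_steps must be > 0")
    if step < warmup_steps:
        # Linear warmup: rises from max_lr / warmup_steps up to max_lr.
        return max_lr * (step + 1) / warmup_steps
    lr = max_lr
    # The last milestone passed determines the current multiplier.
    for frac, factor in zip(milestones, factors):
        if step >= frac * total_steps:
            lr = max_lr * factor
    return lr
```

With `total_steps=10000` and `max_lr=1.0`, the rate reaches 1.0 at the end of warmup, holds there until step 8000, drops to 0.316, and then drops to 0.1 from step 9000 onward. Because the rate is constant within each stage, a checkpoint taken before a drop can, in principle, be reused when training is extended.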

So the notion that capabilities similar to those of America's most powerful AI models could be achieved for such a small fraction of the cost, and on less capable chips, represents a sea change in the industry's understanding of how much investment AI needs. I believe this speaks to a bubble on the one hand, as every executive is going to want to advocate for more investment now, but things like DeepSeek v3 also point toward radically cheaper training in the future. The Financial Times reported that it was cheaper than its peers, at a price of 2 RMB for every million output tokens. The DeepSeek app has surged up the app store charts, surpassing ChatGPT on Monday, and it has been downloaded nearly 2 million times. According to Clem Delangue, the CEO of Hugging Face, one of the platforms hosting DeepSeek's models, developers on Hugging Face have created over 500 "derivative" models of R1 that have racked up 2.5 million downloads combined. Whatever the case may be, developers have taken to DeepSeek's models, which aren't open source as the phrase is commonly understood but are available under permissive licenses that allow commercial use. DeepSeek locked down the database, but the discovery highlights potential risks with generative AI models, particularly international projects.